Docker Bind Mount: Keeping Your Containers Updated

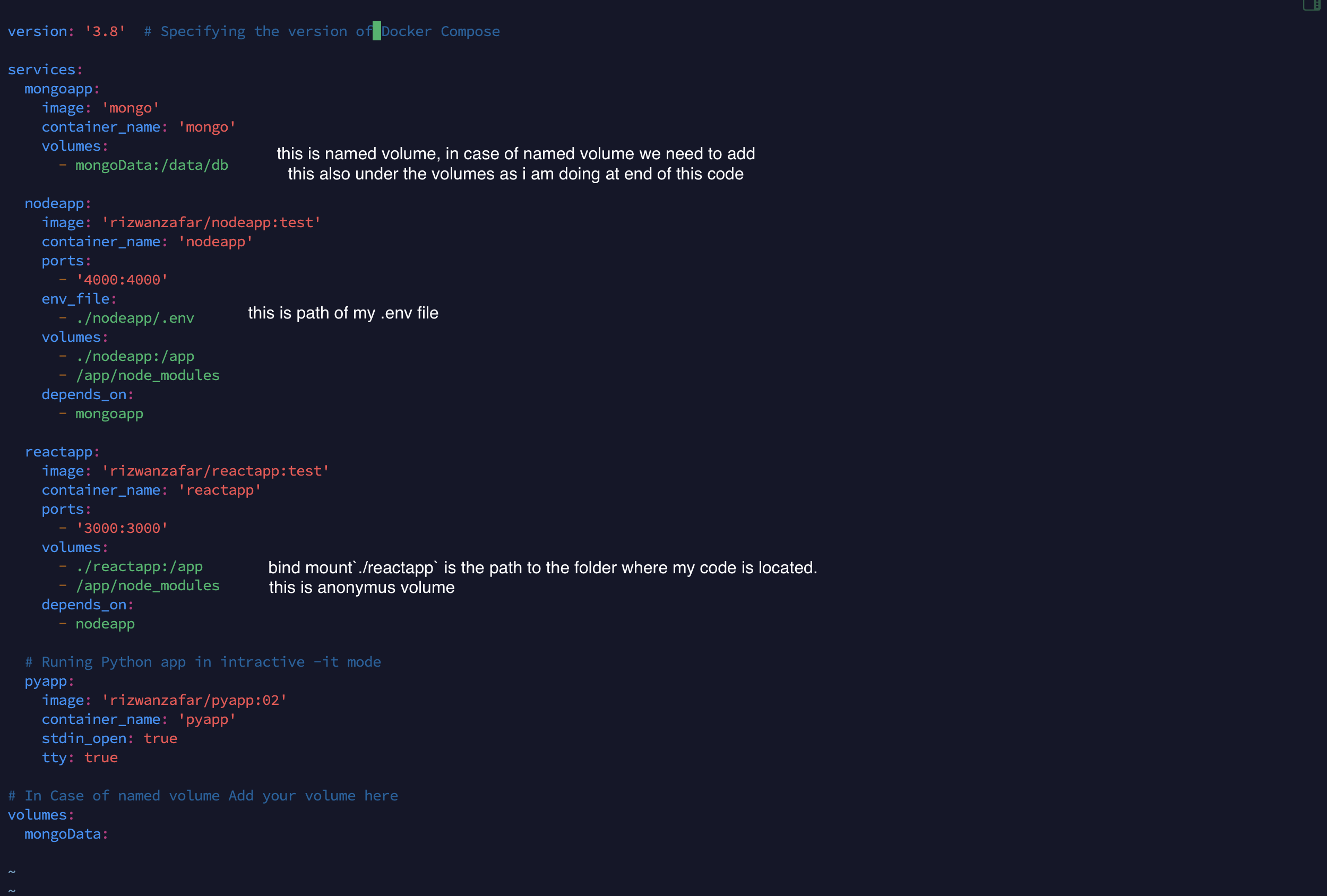

When working with Docker, you might encounter scenarios where a local file needs to stay in sync with a file inside a Docker container. This is where Docker bind mounts come into play. Bind mounts allow you to bind a file or directory on your host machine to a file or directory inside your container. Bind mounts sit alongside anonymous volumes and named volumes as one of Docker's ways to give a container storage. The key difference is that with a bind mount you choose the exact host path, while anonymous and named volumes live in a location that Docker manages.

What is a Docker Bind Mount?

A Docker bind mount allows a file or directory from your host machine to be directly accessible within a container. This means that any updates to the local file are immediately available inside the container.

Example Scenario

Consider you have a file located at `/opt/issues.txt` on your host machine. Your Docker container is designed to manage and process issues listed in this file. However, a container has its own isolated filesystem, so even if a copy of the file was included in the image, later updates to `/opt/issues.txt` on the host machine are not visible inside the container.

To solve this, you can use a bind mount to link the `/opt/issues.txt` file on your host machine with a file inside the Docker container. This way, any updates to the local file are instantly reflected inside the container.

How to Use Docker Bind Mount

Here's a step-by-step example of how to set up a bind mount:

1. **Identify the local file and the target path inside the container**:

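Note: make sure the local path is absolute. With `-v`, a bare name such as `issues.txt` is treated as the name of a volume, not as a path on the host.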

- Local file: `/opt/issues.txt`

- Target path inside the container: `/container/issues.txt`
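If you only have a relative path at hand, a small helper can turn it into an absolute one before it goes into the `-v` flag. This is a minimal sketch: the function name `to_absolute` is made up for illustration, and it assumes the parent directory of the file already exists.

```bash
# Hypothetical helper: convert a possibly relative path into an absolute one
# so it can be used as the host side of a bind mount.
to_absolute() {
  # Resolve the parent directory to its physical location,
  # then re-attach the file name.
  printf '%s/%s\n' "$(cd "$(dirname "$1")" && pwd -P)" "$(basename "$1")"
}

host_file="$(to_absolute ./issues.txt)"

# Print the flag that would be passed to docker run
echo "-v ${host_file}:/container/issues.txt"
```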

2. **Run the Docker container with the bind mount**:

```bash

docker run -d -v /opt/issues.txt:/container/issues.txt --rm imageID

```

Breakdown of the Command

- `docker run`: Starts a new Docker container.

- `-v /opt/issues.txt:/container/issues.txt`: The `-v` flag specifies the bind mount. The local file path (`/opt/issues.txt`) is mapped to the target path inside the container (`/container/issues.txt`).

- `--rm`: Automatically removes the container when it exits.

- `imageID`: The ID of the Docker image you want to run.
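Docker also accepts a longer `--mount` syntax for the same bind mount, which some people find easier to read because every field is named. The sketch below only builds and prints both forms so they can be compared side by side; `imageID` is the same placeholder used above.

```bash
host_file=/opt/issues.txt
container_file=/container/issues.txt

# Short form used in this article: host path, colon, container path
short_flag="-v ${host_file}:${container_file}"

# Long form: the same bind mount written with named fields
long_flag="--mount type=bind,source=${host_file},target=${container_file}"

echo "docker run -d --rm ${short_flag} imageID"
echo "docker run -d --rm ${long_flag} imageID"
```

One practical difference: when the host path does not exist, `-v` creates it as a directory, while `--mount` reports an error instead.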

3. **Make the bind mount read-only (optional)**:

By default, bind mounts in Docker are read-write. This allows changes made outside the container to be reflected inside it, but it also means the container can modify files on your host, which can be risky. To protect the integrity of your files, you can mount them read-only by appending `:ro` to the `-v` value. You can still update the files from outside the container, but the container itself cannot alter your host files or code. Read-only bind mounts are a good default whenever the container only needs to read the data.

Command:

```bash

docker run -d -v /opt/foldername:/container/foldername:ro --rm imageID

```
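One way to confirm that the mount really is read-only is to try writing to it from inside a throwaway container. This is a sketch under a few assumptions: the function name `check_read_only` is made up, the public `alpine` image stands in for your own image, and a Docker daemon is running.

```bash
# Hypothetical check: try to create a file inside the read-only mount
# and report whether the write succeeded.
check_read_only() {
  # $1: absolute host directory to mount read-only
  docker run --rm -v "$1:/container/foldername:ro" alpine \
    sh -c 'if touch /container/foldername/probe 2>/dev/null; then rm -f /container/foldername/probe; echo writable; else echo read-only; fi'
}
```

Calling `check_read_only /opt/foldername` should report `read-only`. If you drop the `:ro` suffix from the function, the same probe should report `writable`.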

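Note: the command above makes everything under `/container/foldername` read-only. If the container still needs to write to one subfolder, add an anonymous volume for that subfolder. The more specific mount takes precedence for that path, so the subfolder is writable while the rest of the folder stays read-only. In the command below, `writable_subfolder` is a placeholder name.

```bash

docker run -d -v /opt/foldername:/container/foldername:ro -v /container/foldername/writable_subfolder --rm imageID

```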
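The read-only mount plus writable subfolder combination can be wrapped in a small function. This is a sketch with a made-up name, `run_with_writable_subfolder`. It creates the subfolder on the host first, on the assumption that Docker may not be able to create a mount point inside a directory that is mounted read-only.

```bash
# Hypothetical wrapper: mount a host folder read-only, but keep one
# subfolder writable by layering an anonymous volume on top of it.
run_with_writable_subfolder() {
  host_dir="$1"    # absolute host directory
  subfolder="$2"   # subfolder name that must stay writable
  image="$3"       # image name or ID

  # Make sure the mount point exists before the parent is mounted read-only
  mkdir -p "${host_dir}/${subfolder}"

  docker run -d --rm \
    -v "${host_dir}:/container/foldername:ro" \
    -v "/container/foldername/${subfolder}" \
    "${image}"
}
```

For example, `run_with_writable_subfolder /opt/foldername logs imageID` would keep `/container/foldername/logs` writable. Keep in mind that data written to an anonymous volume does not land in the host folder, and with `--rm` the anonymous volume is removed together with the container.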

Benefits of Using Bind Mounts

- Immediate Updates: Any changes to the local file are instantly available inside the container.

- Persistent Data: Data in bind-mounted files persists even if the container is removed, making it ideal for development and debugging.

- Flexibility: Easily share files between the host and the container without rebuilding the Docker image.
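To see the immediate-update behavior for yourself, you can append a line on the host and read the file back from inside a running container. This is a sketch with a made-up function name, `check_sync`, and it assumes the container was started with the bind mount to `/container/issues.txt` shown earlier and that its image provides `tail`.

```bash
# Hypothetical check: change the file on the host, then read the last
# line from inside the container to confirm the change is visible.
check_sync() {
  # $1: absolute host file, $2: container name or ID
  echo "new issue added on the host" >> "$1"
  docker exec "$2" tail -n 1 /container/issues.txt
}
```

The append with `>>` modifies the file in place. Some editors save by replacing the file with a new one, and a bind mount of a single file may then keep pointing at the old copy; mounting the parent directory instead avoids that.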

Conclusion

Docker bind mounts are a powerful feature that helps you keep your containers synchronized with the host machine. By using bind mounts, you can ensure that your container has access to the most up-to-date information, improving the efficiency and reliability of your containerized applications.

Optimize your workflow and keep your containers updated with Docker bind mounts!

