Docker Commands Cheat Sheet: From Basics to Advanced

1. Docker Installation

- Command: `docker --version`

- Description: Check Docker installation and version.

Example: docker --version

Output: `Docker version 20.10.7, build f0df350` (your version and build number will differ)

2. Docker Images

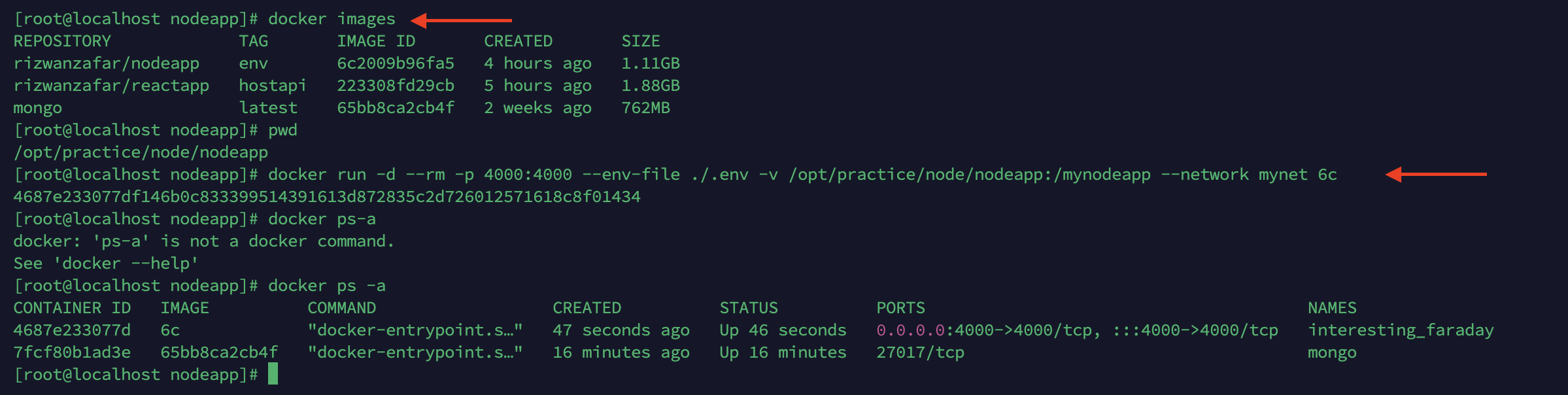

List Docker Images

- Command: docker images

- Description: Lists all Docker images on your local machine.

Example: docker images

Output: Lists all images with columns: REPOSITORY, TAG, IMAGE ID, etc.

Pull a Docker Image

- Command: docker pull [image_name]

- Description: Downloads a Docker image from a registry (e.g., Docker Hub).

Example: docker pull nginx

Output: Downloads the latest nginx image.

Remove a Docker Image

- Command: docker rmi [image_id]

- Description: Removes a Docker image from your local machine.

Example: docker rmi 7b28eabc0405

Output: Deletes the image with the specified ID.

Search for a Docker Repository

- Command: docker search [repository_name]

- Description: Searches Docker Hub for repositories that match the given name or keyword.

Example: docker search rizwanzafar/pyapp

Output: Displays a list of public repositories related to "rizwanzafar/pyapp" if available on Docker Hub.

3. Docker Containers

Run a Docker Container

- Command: docker run [image_name]

- Description: Creates and runs a new container from a specified image.

Example: docker run nginx

Output: Runs an nginx server in a container.

Run a Container with a Name

- Command: docker run --name myContainer [image_name]

- Description: Creates and runs a new container from the specified image and gives it the name you choose.

Example: docker run --name reactapp rizwanzfar/reactapp

Output: Runs the reactapp server in a container named reactapp.

Run a Container on a Specific Port

- Command: docker run -p hostPort:containerPort [image_name]

- Description: Creates and runs a new container from the specified image and maps a port on your computer (the host) to a port inside the container.

I used 3000 as the container port because a React app runs on port 3000 by default inside the container.

Example: docker run -p 5000:3000 rizwanzfar/reactapp

Output: Runs the reactapp server, reachable on your system at port 5000.

List Running Containers

- Command: docker ps

- Description: Lists all currently running containers.

Example: docker ps

Output: Displays active containers with details like CONTAINER ID, IMAGE, etc.

List All Containers

- Command: docker ps -a

- Description: Lists all containers, including stopped ones.

Example: docker ps -a

Output: Shows all containers with their statuses.

Stop a Running Container

- Command: docker stop [container_id]

- Description: Stops a running container.

Example: docker stop d4c3d4c3d4c3

Output: Stops the container with the given ID.

Remove a Docker Container

- Command: docker rm [container_id]

- Description: Deletes a stopped container.

Example: docker rm d4c3d4c3d4c3

Output: Removes the container with the specified ID.

Start a Stopped Container

- Command: docker start [container_id]

- Description: Starts a container that has been stopped.

Example: docker start d4c3d4c3d4c3

Output: Starts the stopped container again.

Run a Container in Detached Mode

- Command: docker run -d [image_name]

- Description: Runs a container in the background (detached mode).

Example: docker run -d nginx

Output: Runs nginx in the background and outputs the container ID.
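The container commands above are usually chained together. The sketch below walks one container through its whole lifecycle, assuming Docker is installed, the daemon is running, and host port 8080 is free. The function name nginx_lifecycle_demo and the container name web are hypothetical choices for illustration; any command that takes a container ID also accepts the container's name.

```shell
# Hypothetical helper: one container from "docker run" to "docker rm".
# Assumes Docker is installed, the daemon is running, and host port 8080 is free.
nginx_lifecycle_demo() {
  # Start nginx in the background, named "web", mapping host port 8080 to container port 80.
  # "docker run -d" prints the new container's ID, which we capture.
  cid=$(docker run -d --name web -p 8080:80 nginx) || return 1
  echo "Started container $cid"

  docker ps --filter name=web                # confirm it is running
  docker port web                            # show the host-to-container port mapping
  docker exec web ls /usr/share/nginx/html   # run a command inside the running container
  docker logs web                            # print nginx's output so far

  docker stop web                            # stop it; "docker ps -a" now lists it as Exited
  docker start web                           # start the same container again
  docker stop web
  docker rm web                              # remove it once it is stopped
}
```

Define the function in your shell (or source the file that contains it), then run nginx_lifecycle_demo.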

4. Docker Volumes

Create a Docker Volume

- Command: docker volume create [volume_name]

- Description: Creates a new Docker volume.

Example: docker volume create my_volume

Output: Creates a volume named `my_volume`.

List Docker Volumes

- Command: docker volume ls

- Description: Lists all Docker volumes on your system.

Example: docker volume ls

Output: Lists all volumes with details.

Remove a Docker Volume

- Command: docker volume rm [volume_name]

- Description: Deletes a Docker volume.

Example: docker volume rm my_volume

Output: Deletes the volume my_volume.
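A volume becomes useful once a container mounts it with the -v volume_name:/path flag. The following sketch assumes Docker is running and reuses my_volume from the examples above; the function name volume_persistence_demo and the use of the small alpine image are illustrative assumptions.

```shell
# Hypothetical helper: data written to a named volume outlives the container that wrote it.
volume_persistence_demo() {
  docker volume create my_volume

  # First container: mount my_volume at /data and write a file into it.
  # --rm deletes the container when it exits, but the volume is kept.
  docker run --rm -v my_volume:/data alpine sh -c 'echo hello > /data/greeting.txt'

  # Second, brand-new container: the file is still there because it lives in the volume.
  docker run --rm -v my_volume:/data alpine cat /data/greeting.txt

  # Remove the volume once no container is using it.
  docker volume rm my_volume
}
```

If everything works, the second docker run prints hello.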

5. Docker Networking

List Docker Networks

- Command: docker network ls

- Description: Lists all Docker networks.

Example: docker network ls

Output: Lists all networks, e.g., the default bridge, host, and none networks.

Create a Docker Network

- Command: docker network create [network_name]

- Description: Creates a new custom Docker network.

Example: docker network create my_network

Output: Creates a network named my_network.

Connect a Container to a Network

- Command: docker network connect [network_name] [container_id]

- Description: Connects a running container to an existing network.

Example: docker network connect my_network d4c3d4c3d4c3

Output: Connects the specified container to my_network.
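Besides docker network connect, a container can join a network when it is created, using docker run --network. On a user-defined network like my_network, Docker's built-in DNS lets containers reach each other by container name. A minimal sketch, assuming Docker is running; the function name network_dns_demo, the container name web, and the 2-second wait are illustrative assumptions.

```shell
# Hypothetical helper: two containers on a user-defined network talk to each other by name.
network_dns_demo() {
  docker network create my_network

  # Start nginx in the background on my_network, under the name "web".
  docker run -d --name web --network my_network nginx

  # Give nginx a moment to start listening.
  sleep 2

  # A throwaway alpine container on the same network fetches the page using the name "web".
  docker run --rm --network my_network alpine wget -qO- http://web

  # Clean up: force-remove the container, then the now-empty network.
  docker rm -f web
  docker network rm my_network
}
```

The default bridge network does not provide this name-based lookup, which is one reason to create your own network.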

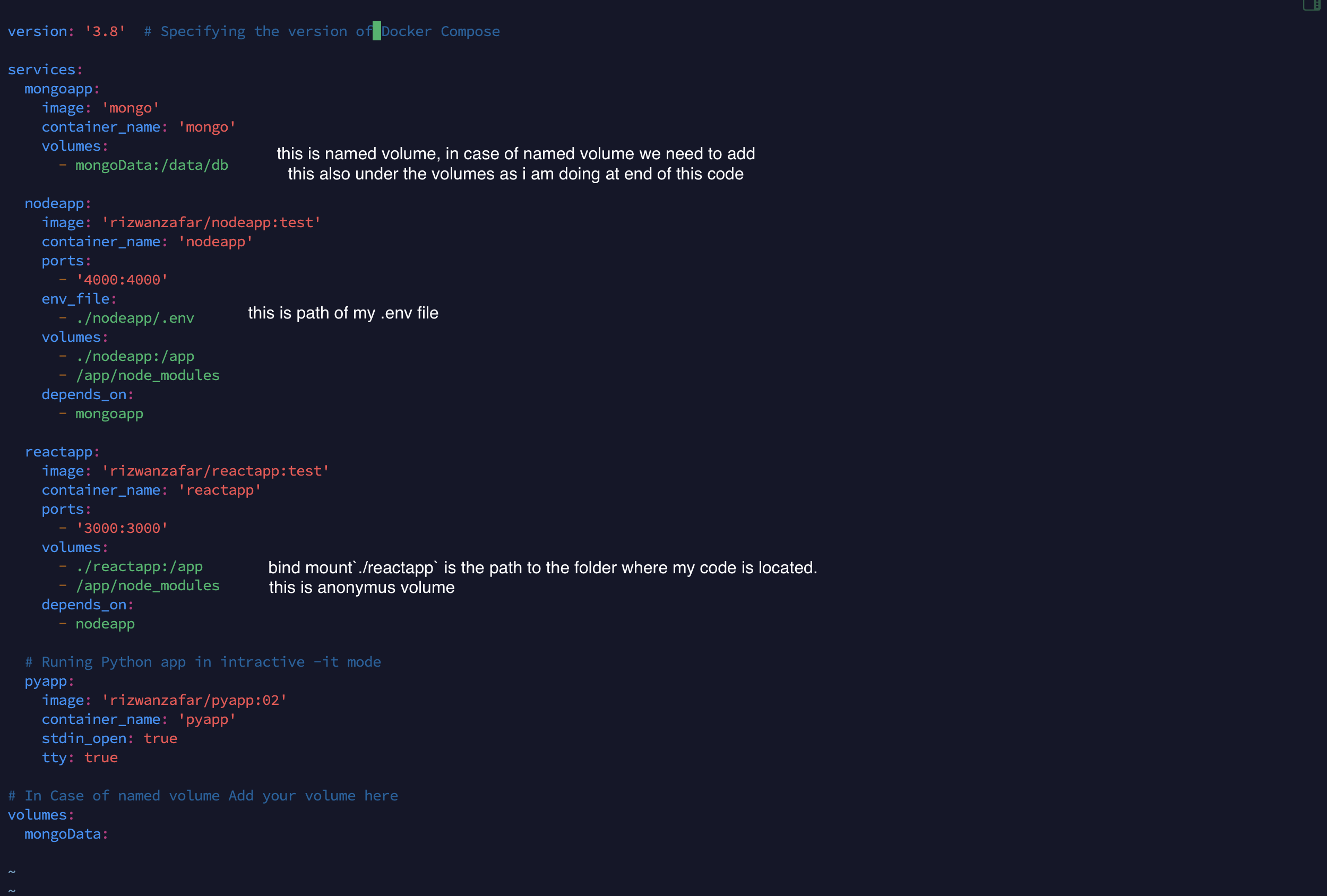

6. Docker Compose

Run Docker Compose

- Command: docker compose up

- Description: Builds, (re)creates, starts, and attaches to containers for a service. With the older standalone Compose v1, the same command is written docker-compose up.

Example: docker compose up

Output: Starts all services defined in the docker-compose.yml file.

Stop Docker Compose

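- Command: docker compose down

- Description: Stops and removes the containers and networks created by docker compose up for the services in docker-compose.yml. Named volumes are kept unless you add the -v (--volumes) flag. With Compose v1, use docker-compose down.

Example: docker compose down

Output: Stops and cleans up the Docker Compose environment.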

Build and Run Containers in Detached Mode

- Command: docker compose up -d --build

- Description: Builds the images and starts the containers defined in the docker-compose.yml file in detached mode (in the background). The --build flag forces the images to be rebuilt before the containers start, instead of reusing previously built ones.

Example: docker compose up -d --build

Output: Rebuilds the Docker images and runs the containers in the background, leaving your terminal free.
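All of the Compose commands above read a docker-compose.yml file from the current directory. Here is a minimal sketch of one, written from the shell with a heredoc; the service names web and cache, the nginx and redis images, host port 8080, and the volume name cache_data are assumptions for illustration.

```shell
# Write a minimal, hypothetical docker-compose.yml with two services.
cat > docker-compose.yml <<'EOF'
services:
  web:
    image: nginx            # official nginx image from Docker Hub
    ports:
      - "8080:80"           # host port 8080 -> container port 80
    depends_on:
      - cache               # start cache before web
  cache:
    image: redis            # official redis image
    volumes:
      - cache_data:/data    # named volume, kept by "docker compose down" unless -v is given
volumes:
  cache_data:
EOF
```

Run docker compose up -d in the same directory to start both services. Since neither service has a build: section, --build has nothing to rebuild here; it only affects services built from a Dockerfile. docker compose down -v would also delete the cache_data volume.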

7. Docker Advanced Commands

Inspect a Docker Container

- Command: docker inspect [container_id]

- Description: Displays detailed information about a container.

Example: docker inspect d4c3d4c3d4c3

Output: Shows JSON output with details of the container.

View Container Logs

- Command: docker logs [container_id]

- Description: Fetches and displays logs from a container.

Example: docker logs d4c3d4c3d4c3

Output: Displays the container’s logs.

Run a Command in a Running Container

- Command: docker exec [container_id] [command]

- Description: Executes a command inside a running container.

Example: docker exec d4c3d4c3d4c3 ls /var/log

Output: Lists the contents of /var/log inside the container.

Prune Unused Docker Resources

- Command: docker system prune

- Description: Removes all stopped containers, unused networks, and dangling images.

Example: docker system prune

Output: Frees up space by removing unused Docker resources.

Build and Push an Image to Docker Hub

- Command: docker build -t [repository_name]:[tag] . && docker push [repository_name]:[tag]

- Description: Builds an image from the Dockerfile in the current directory (the trailing . is the build context) and pushes it to Docker Hub. Log in first with docker login.

Example: docker build -t myrepo/myimage:v1 . && docker push myrepo/myimage:v1

Output: Builds and uploads the image to your Docker Hub repository.
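docker build needs a Dockerfile in the build context (the . in the command). A minimal sketch of a build context you could push as myrepo/myimage:v1; the directory name myimage, the page content, and the nginx:alpine base image are assumptions for illustration.

```shell
# Create a tiny, hypothetical build context: one HTML page and a Dockerfile.
mkdir -p myimage && cd myimage || exit 1

cat > index.html <<'EOF'
<h1>Hello from myrepo/myimage</h1>
EOF

cat > Dockerfile <<'EOF'
# Start from the official nginx image (the Alpine variant keeps it small).
FROM nginx:alpine
# Replace the default welcome page with our own.
COPY index.html /usr/share/nginx/html/index.html
EOF
```

From inside myimage/, run docker login, then docker build -t myrepo/myimage:v1 . && docker push myrepo/myimage:v1. Replace myrepo with your own Docker Hub username, because you can only push to repositories your account has access to.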

8. Docker Swarm (Orchestration)

Initialize Docker Swarm

- Command: docker swarm init

- Description: Initializes a new Docker Swarm cluster and makes the current node a manager.

Example: docker swarm init

Output: Initializes the Swarm and displays the join token.

Deploy a Stack to Docker Swarm

- Command: docker stack deploy -c [stack_file] [stack_name]

- Description: Deploys a stack (collection of services) to Docker Swarm.

Example: docker stack deploy -c docker-stack.yml mystack

Output: Deploys the stack defined in docker-stack.yml.

Update a Service in Docker Swarm

- Command: docker service update [options] [service_name]

- Description: Updates the configuration of an existing service in the Swarm.

Example: docker service update --replicas 5 myservice

Output: Scales the service to 5 replicas.
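docker stack deploy reads a Compose-format file whose deploy: section holds Swarm settings such as the replica count. A minimal sketch of docker-stack.yml, assuming a single service named myservice running nginx; the port, replica count, and restart policy are illustrative choices.

```shell
# Write a minimal, hypothetical docker-stack.yml for "docker stack deploy".
cat > docker-stack.yml <<'EOF'
version: "3.8"
services:
  myservice:
    image: nginx
    ports:
      - "8080:80"              # published through the Swarm routing mesh
    deploy:
      replicas: 3              # Swarm keeps 3 tasks of this service running
      restart_policy:
        condition: on-failure  # restart a task only if it exits with an error
EOF
```

After docker stack deploy -c docker-stack.yml mystack, Swarm names the service mystack_myservice (stack name, underscore, service name), so scaling it would be docker service update --replicas 5 mystack_myservice.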

Conclusion

This cheat sheet covers the essential Docker commands you'll need, from basic container management to advanced orchestration with Docker Swarm. Each command includes a brief description and an example, making it easy to follow along and apply to your Docker projects.

